Shaky Savine & Doing Nothing with AI

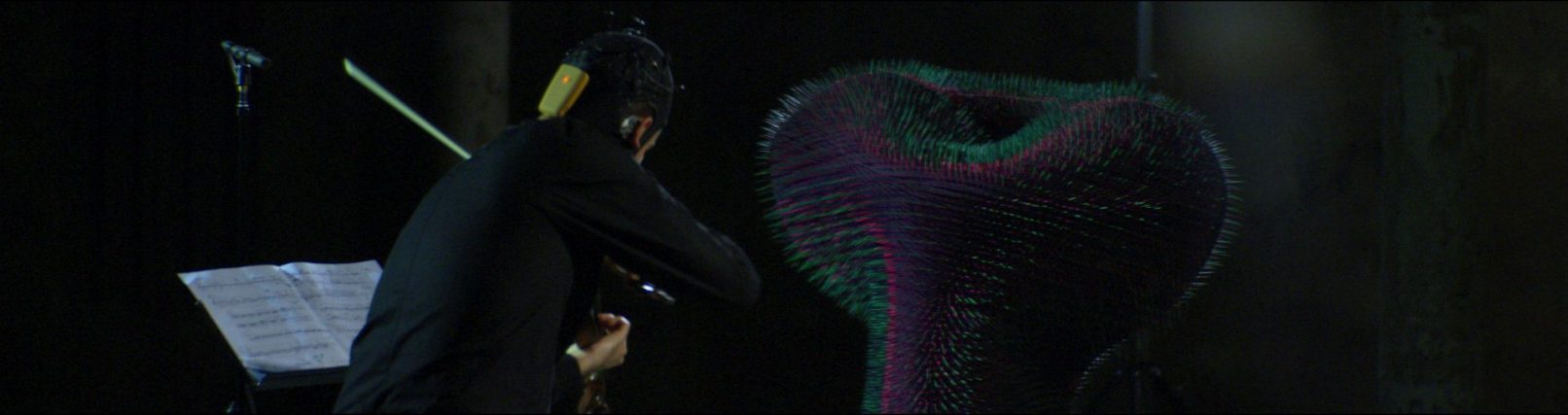

Shaky Savine & Doing Nothing with AI is a live performance in which a solo violinist enters into an embodied duet with the neuroreactive robotic installation Doing Nothing with AI. While improvising the modular violin composition Shaky Savine in response to the robot’s evolving motions, the musician wears an EEG cap that captures their brain activity in real time.

The installation analyses this neural data to isolate signals from the default mode network, an unconscious, decentralised brain activity associated with introspection and doing nothing. Guided by this input, the robot attempts to learn which movement patterns might reactivate the performer’s resting state. Rather than hearing the music, the robot listens to the performer’s mental rhythms and adapts its movements accordingly, influencing and responding to the performer’s unconscious doing nothing state. Yet, the focused act of improvising the violin composition naturally suppresses the doing nothing brain activity that the embedded AI seeks to elicit. This divergence of objectives becomes the creative engine of the work, generating a dynamic encounter in which human and machine co-compose in real time.

The performance critically explores how AI assemblages reshape our experience of idleness, introspection, and agency. It raises questions about authorship and embodied intention within human–machine collaboration. Rather than seeking seamless alignment, Shaky Savine & Doing Nothing with AI embraces the friction between human and machinic goals as fertile ground to reimagine doing nothing with AI as an active co-negotiation, challenging prevailing notions of productivity and inviting deeper reflection on the future of creative agency.

Shaky Savine & Doing Nothing with AI operates as a closed-loop system linking brainwave measurements, machine learning, generative robot control, and musical improvisation. The violinist’s brain signals are captured by an Enobio 8-channel electroencephalography (EEG) cap and streamed live to the installation’s computer. The brain signal data is processed to isolate patterns from the default mode network (DMN), an unconscious, decentralized brain network associated with introspection or “doing nothing”. Whenever we aren’t actively pursuing a task, this brain network starts to compare our short-term memory against our long-term memory. This unconscious process updates our environmental and social reference values and expectations, which makes it an important task in its own right.
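The exact signal processing used to isolate DMN patterns is not described here. As a rough illustration only, the following sketch reduces one window of 8-channel EEG to a single resting-state score. Everything in it is an assumption made for the example: the 500 Hz sample rate, the use of the alpha share of alpha-plus-beta band power as a stand-in for DMN activity, and the names `band_power` and `dmn_proxy`.

```python
import numpy as np

SAMPLE_RATE_HZ = 500  # assumed sample rate, not taken from the project
N_CHANNELS = 8        # the Enobio cap used here has 8 channels


def band_power(window, low_hz, high_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """Mean spectral power per channel in the band [low_hz, high_hz).

    `window` has shape (channels, samples).
    """
    n_samples = window.shape[-1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / sample_rate_hz)
    # A Hann taper reduces spectral leakage at the window edges.
    spectrum = np.abs(np.fft.rfft(window * np.hanning(n_samples), axis=-1)) ** 2
    in_band = (freqs >= low_hz) & (freqs < high_hz)
    return spectrum[..., in_band].mean(axis=-1)


def dmn_proxy(window):
    """Hypothetical resting-state score between 0 and 1.

    Uses the share of alpha (8-13 Hz) power in alpha-plus-beta (8-30 Hz)
    power, averaged over channels. This is a crude stand-in, not the
    installation's actual DMN measure.
    """
    alpha = band_power(window, 8.0, 13.0)
    beta = band_power(window, 13.0, 30.0)
    return float(np.mean(alpha / (alpha + beta + 1e-12)))
```

A score like this could be recomputed on a sliding window, for example every two seconds of incoming data, so that downstream components always see a recent estimate.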

An industrial KUKA robotic arm, wearing a garment that it produced itself, serves as the AI’s physical body (Link: Doing Nothing with AI). An embedded reinforcement learning (RL) agent controls the robot, treating the live DMN metrics as a reward signal. It continuously tweaks the arm’s motions to discover choreographies that nudge the performer’s mind toward the default mode state. The RL agent operates in real time, updating the robot’s movements every few milliseconds and effectively training itself live on the performer’s unconscious DMN feedback.
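The idea of treating a DMN metric as a reward can be sketched with a very small learner. The RL algorithm actually used in the installation is not specified here, so the example below swaps in an epsilon-greedy bandit over a handful of discrete movement patterns. The class name `MovementBandit`, its parameters, and the notion of a fixed set of patterns are all hypothetical.

```python
import random


class MovementBandit:
    """Epsilon-greedy bandit over discrete movement patterns (illustrative only).

    The reward passed to `update` stands for a live DMN score; higher means
    the performer appears closer to a resting state.
    """

    def __init__(self, n_patterns, epsilon=0.1, step_size=0.1, seed=None):
        self.values = [0.0] * n_patterns  # running reward estimate per pattern
        self.epsilon = epsilon            # probability of trying a random pattern
        self.step_size = step_size        # constant, so recent rewards weigh more
        self.rng = random.Random(seed)

    def choose(self):
        """Pick a pattern: explore occasionally, otherwise take the best estimate."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        return max(range(len(self.values)), key=self.values.__getitem__)

    def update(self, pattern, reward):
        """Move the estimate for `pattern` a fraction of the way toward `reward`."""
        self.values[pattern] += self.step_size * (reward - self.values[pattern])
```

In a live loop, the agent would call `choose()`, let the robot perform the chosen pattern, read the next DMN score, and pass it to `update()`. The constant step size is a deliberate choice in this sketch: a performer’s responses drift over the course of a performance, so older rewards should fade rather than be averaged in forever.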

The violin composition is divided into 10 short modules (each 10–30 seconds in length) that can be performed in any order and repeated or varied freely. This modular score allows the violinist to improvise and respond in the moment, rather than follow a fixed sequence. He can linger on a section or shift dynamics in reaction to the robot’s behavior, using his aesthetic judgment to shape the music to the machine’s “mood.” Conversely, the robot’s presence (its motion, sound, and proximity) influences the performer’s focus and emotional state, which in turn affects his playing and brain activity. Thus, a tight feedback loop emerges: human and machine continuously modulate each other’s input.

As stated above, however, the setup poses a paradox: the violinist’s focused playing naturally suppresses the very brain state (DMN) the AI is trying to evoke. The RL agent attempts to navigate this friction by exploring subtle cues or pauses that might let the performer’s mind wander momentarily. The tension between the human’s goal (to perform) and the machine’s goal (to induce rest) becomes the creative engine of the piece, yielding an ever-evolving interplay unique to each performance.

In summary, the technical realization showcases a form of human–robot co-creation grounded in unconscious brain signals and conflicting objectives. The performer’s unconscious brain activity serves as feedback for generative robotic choreographies, enabling the human-machine ensemble to collaborate and co-shape this musical dialogue in real time.

More information on the embedded aesthetic human–machine interaction, the neuroaesthetics topic of bottom-up perception, and the use of EEG-fed generative adversarial networks for interactive art within the different “Doing Nothing with AI” iterations can be found in this open access publication by me, Magdalena Mayer, and Johannes Braumann: https://dl.acm.org/doi/pdf/10.1145/3430524.3440647

Music

Armin Sanayei | composition

Jacobo Hernández Enríquez | violin

Alexander Yannilos | recording & sound mastering

Installation

Emanuel Gollob | design, concept & research

Magdalena May | concept & research

Johannes Braumann | robotic advice

Dr. Orkan Attila Akgün | neuroscientific advice

Movie

Anna Mitterer | director & cut

Philipp Windsor-Topolsky | camera & light

Tobias Aschermann | focus puller & DIT & colour grading

Aram Baronian | dolly grip

Hardware | KUKA industrial robot Agilus 2 | Enobio EEG Cap from Neuroelectrics

Software | Reinforcement Learning (RL) | vvvv gamma | Robot Sensor Interface | KUKA|prc

Acknowledgements | Supported by Vienna Business Agency | Parts of this iteration were produced at the Design Investigations Studio of the University of Applied Arts Vienna | The project was realized in a co-production between the Ensemble Reconsil and REAKTOR